Machine Learning, Explained

The foundations of Machine Learning can be traced back to the 1940s and 50s, with notable contributions from Claude Shannon and Alan Turing. In 1959, Arthur Samuel popularized the term “machine learning” with a computer program that learned to play checkers. Only recently, however, with hardware powerful enough to fully exploit Machine Learning and access to immense quantities of data, have we witnessed a remarkable resurgence of AI.

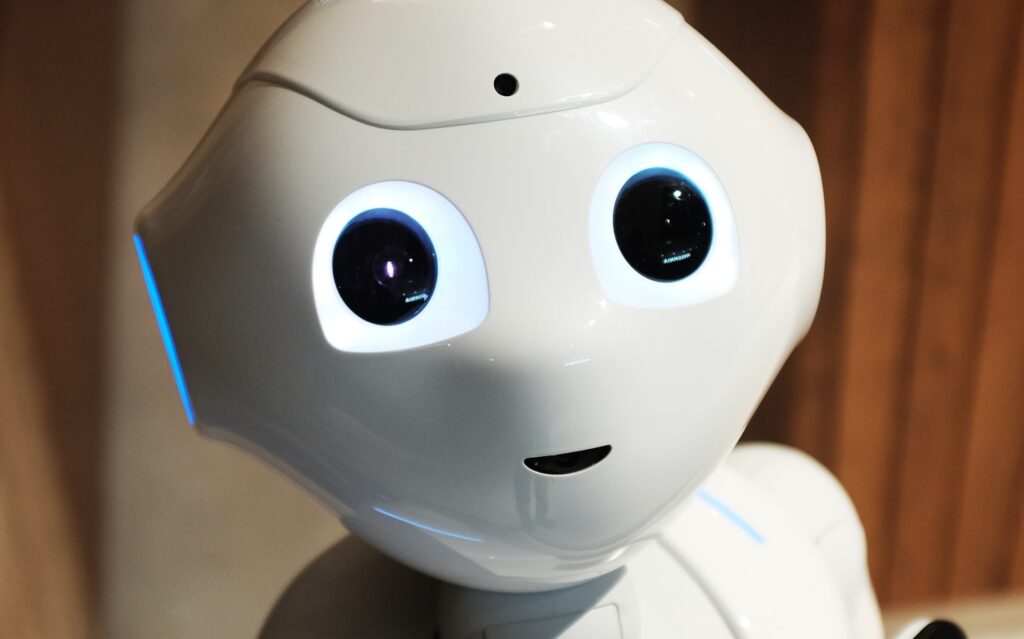

So, what exactly is Machine Learning? It is a method that enables machines to learn and improve their performance at specific tasks. The machine builds an internal decision model with a particular goal, such as identifying dogs in pictures. Through practice, the machine improves its decisions by receiving feedback on whether each guess was correct. For example, it may initially guess, incorrectly, that there is no dog in the picture below; after being told that there actually is a dog, it adjusts its decision model to make the same mistake less likely. After enough practice, the model will guess correctly most of the time, even on new photos it has never seen before. But how does the internal decision model work?

There is definitely a dog in this picture.

Many different models can be used. However, they all share a common characteristic: they take input(s) and produce output(s). Inputs and outputs can be images, videos, text, or audio. For instance, the model can output the text “yes” or “no” to indicate the presence of a dog in a photo. Models are commonly grouped into three categories according to how they obtain and learn from data: Supervised Learning, Unsupervised Learning, and Reinforcement Learning.

Supervised Learning requires both inputs and the corresponding correct outputs, provided by an external (usually human) labeler; this is where the supervision comes in. For example, if the inputs are pictures and the goal is to determine whether a dog is present, every picture needs to be labeled as either containing a dog or not. Training the model then means letting it adjust its decision-making process based on these answers. Training is an iterative procedure: at each step, the model is updated to reduce the classification errors it has made so far.
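The training loop described above can be illustrated with a perceptron, one of the simplest supervised learners. This is a minimal sketch for illustration, not the way modern image classifiers are built. It assumes each picture has already been reduced to two hypothetical numeric features (`furriness` and `ear_pointiness`, made up for this example) and that a human has labeled each picture 1 (dog) or 0 (no dog). Every time the model misclassifies a labeled example, it nudges its weights toward the correct answer, and it stops once it makes no more mistakes on the training set.

```python
# A minimal perceptron: a supervised learner that adjusts its weights
# every time it misclassifies a labeled example.


def predict(weights, bias, features):
    """Return 1 ("yes, dog") if the weighted sum is positive, else 0 ("no")."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0


def train_perceptron(examples, labels, epochs=20, learning_rate=0.1):
    """Iteratively adjust the weights to reduce classification errors."""
    weights = [0.0] * len(examples[0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for features, label in zip(examples, labels):
            error = label - predict(weights, bias, features)  # 0 if correct, +1/-1 if wrong
            if error != 0:
                mistakes += 1
                # Feedback step: shift the decision boundary toward the correct answer.
                weights = [w + learning_rate * error * x for w, x in zip(weights, features)]
                bias += learning_rate * error
        if mistakes == 0:  # every training example is now classified correctly
            break
    return weights, bias


# Hypothetical features per picture: [furriness, ear_pointiness], values in [0, 1].
pictures = [[0.9, 0.8], [0.8, 0.9], [0.7, 0.7], [0.1, 0.2], [0.2, 0.1], [0.3, 0.2]]
has_dog = [1, 1, 1, 0, 0, 0]  # labels supplied by a human: 1 = dog, 0 = no dog

weights, bias = train_perceptron(pictures, has_dog)
print(predict(weights, bias, [0.85, 0.75]))  # a new, unseen picture; prints 1
```

On this made-up data the perceptron separates the two groups within a few passes and classifies the unseen picture as a dog. Real image classifiers learn from raw pixels with far larger models, but they follow the same broad guess, compare, and adjust pattern.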

Unsupervised Learning, on the other hand, requires only inputs and focuses on grouping similar inputs together. In the dog picture scenario, the model might group pictures based on shared features: simple ones like color, contrast, and brightness, and more complex ones related to texture, geometry, topology, etc. Because dog pictures tend to share such properties, they might end up grouped together, even though the model was never told what a dog is. If you are not that interested in dogs, consider how this approach could help analyze a population of patients, identifying subgroups that share certain characteristics and might therefore benefit from a specific therapy.
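Grouping without labels is often done with a clustering algorithm. The sketch below uses k-means, a classic clustering method chosen here for illustration (the text does not prescribe a specific algorithm). Each picture is again assumed to be summarized by two hypothetical features in [0, 1]; the algorithm is never told which pictures show dogs and simply alternates between assigning each point to its nearest cluster center and moving each center to the mean of its points.

```python
import math


def kmeans(points, k, iterations=100):
    """Group points into k clusters using only the inputs (no labels)."""
    # Naive initialization: the first k points become the starting centers.
    centroids = [list(p) for p in points[:k]]
    clusters = [[] for _ in range(k)]
    for _step in range(iterations):
        # Assignment step: each point joins the cluster of its nearest center.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of its members
        # (an empty cluster keeps its previous center).
        new_centroids = [
            [sum(values) / len(members) for values in zip(*members)] if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
        if new_centroids == centroids:  # no center moved: the grouping is stable
            break
        centroids = new_centroids
    return clusters, centroids


# Hypothetical features per picture: [fur_texture, snout_length], values in [0, 1].
pictures = [[0.9, 0.8], [0.1, 0.2], [0.8, 0.9], [0.2, 0.1], [0.85, 0.85], [0.15, 0.15]]

clusters, centers = kmeans(pictures, k=2)
for i, members in enumerate(clusters):
    print(f"group {i}: {members}")
```

Using the first k points as starting centers is a naive choice; practical implementations usually rely on smarter initializations such as k-means++. Here the six points settle into two groups of three, and deciding which group corresponds to “dogs” is left to a human afterwards.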

Reinforcement Learning differs from Supervised and Unsupervised Learning in that it introduces the concept of an agent. The agent tries to maximize rewards (or minimize punishments); to do so, it interacts with an environment and makes a sequence of decisions, gradually arriving at a strategy. Nobody tells the agent which action was correct: it only receives positive feedback when its actions achieve its goals.

For instance, let’s say we have a robot equipped with a camera and a motor, whose task is to drive over pictures of dogs. The input is now a video from the robot’s camera, and the output is the direction in which the robot moves. The robot earns points based on the outcome of its actions: driving over a dog picture is worth +10 points, while driving over a cat picture costs 10 points (-10). Based on the points it receives, it changes its strategy to maximize its total score.
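The reward-driven learning in the robot example can be sketched with tabular Q-learning, one standard Reinforcement Learning algorithm (swapped in for illustration; the text does not name one). To keep the sketch small, the camera video is replaced by a hypothetical five-cell corridor: the robot starts in the middle, a cat picture sits at the left end (-10 points) and a dog picture at the right end (+10 points). The agent is never told which way to go; it learns only from the points it collects, occasionally trying a random move (exploration) so that it can discover the reward.

```python
import random

# Hypothetical 1-D world: cells 0..4, cat picture at 0 (-10), dog picture at 4 (+10).
N_CELLS, START, CAT, DOG = 5, 2, 0, 4
ACTIONS = (-1, +1)  # move left or right


def step(cell, action):
    """Environment: apply a move and return (next_cell, reward, episode_done)."""
    nxt = cell + action
    if nxt == DOG:
        return nxt, 10, True
    if nxt == CAT:
        return nxt, -10, True
    return nxt, 0, False


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Learn q[(cell, action)]: the expected discounted points after a move."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_CELLS) for a in ACTIONS}
    for _ in range(episodes):
        cell, done = START, False
        while not done:
            # Mostly take the best-known move, sometimes explore a random one.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(cell, a)])
            nxt, reward, done = step(cell, action)
            best_next = 0.0 if done else max(q[(nxt, a)] for a in ACTIONS)
            # Nudge the estimate toward: reward now + discounted future value.
            q[(cell, action)] += alpha * (reward + gamma * best_next - q[(cell, action)])
            cell = nxt
    return q


q = q_learning()
# Strategy: the best-known move in each non-terminal cell (-1 = left, +1 = right).
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(1, N_CELLS - 1)}
print(policy)
```

With these settings the learned strategy should settle on moving right (+1) in every cell, toward the dog picture, because that is where the points are.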

In summary, although the foundations of Machine Learning date back to the 1940s, its full potential could only be realized recently, thanks to increased computational resources, access to huge amounts of training data, and a few new or refined artificial neural network architectures. Today, the field of Machine Learning encompasses a diverse range of approaches to problem-solving, yet they all share fundamental characteristics: they take inputs, produce outputs, and improve their performance with experience.